
May 30, 2023

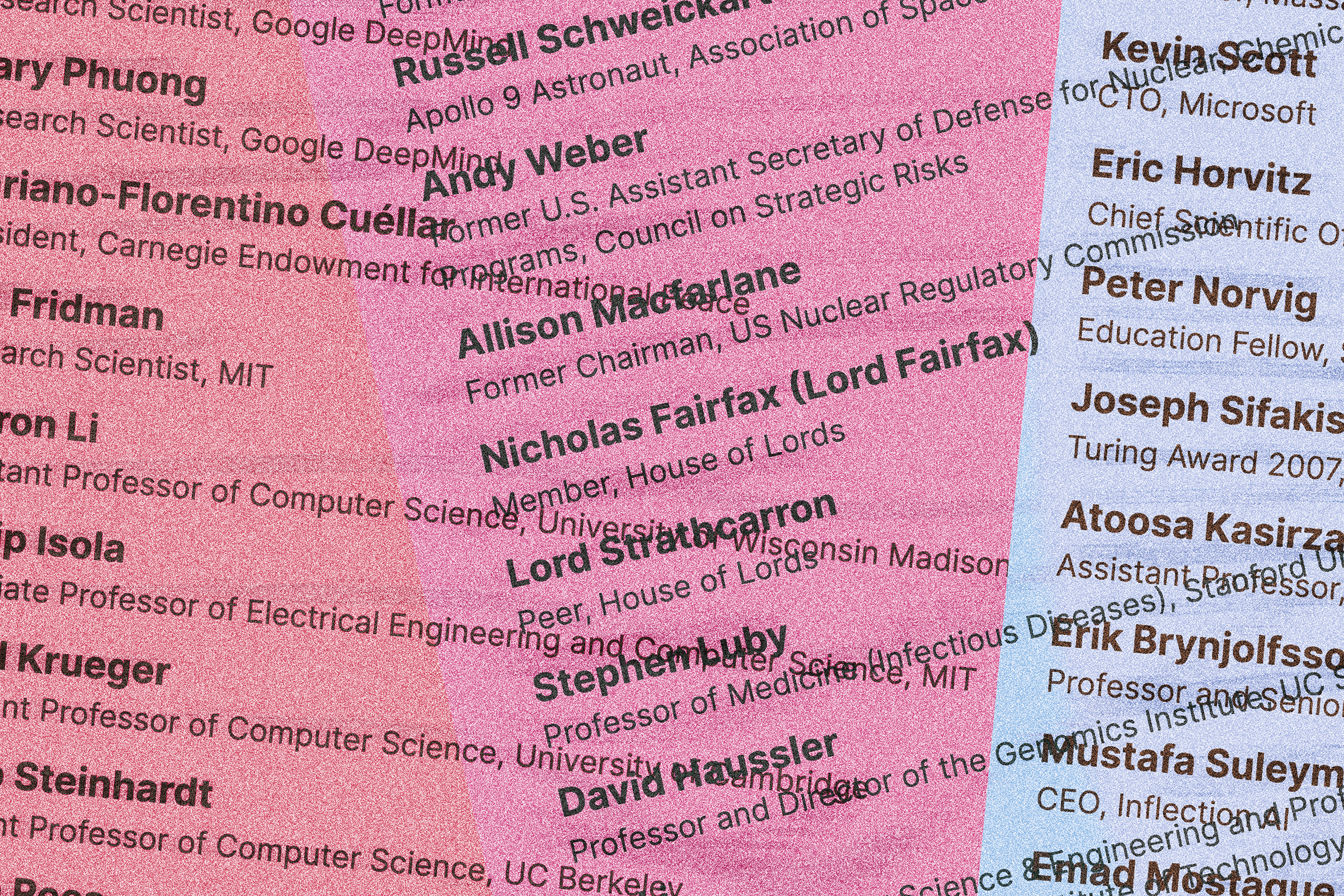

A short statement that reads “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war” was published by the Center for AI Safety today. Titled “Statement on AI Risk,” it’s signed by OpenAI CEO Sam Altman, Google DeepMind CEO Demis Hassabis, and Anthropic CEO Dario Amodei, among many other notable figures and scientists in AI.

The statement comes on the heels of Altman’s appearance before Congress, where he suggested an AI licensing regime for models whose capabilities may be risky, but only “as we head toward AGI.”