
“In a decel society, it is not enough to be non-decel, we must be anti-decel.”

---

Knife fight in a clown car. By yesterday, at least 700 of 770 OpenAI employees vowed to leave the company following the board’s incomprehensible decision to fire CEO Sam Altman, thus marking one of the great disasters in corporate governance history. The chaos catalyzed by this unimaginably stupid decision lasted several days, with no clear sense of who would win the AI game of thrones — the board, Sam, or a third, more mysterious thing (Satya Nadella) — and the only people who knew for sure why the board had flipped its suicide switch were the few responsible. But, from all available pieces of this clown car puzzle, the nut of the drama looks something like this: a handful of decel cult members hacked the non-profit entity clunkily responsible for OpenAI, then attempted to ritually castrate the company in order to save the world from the malevolent, all-powerful demonic entity promised in the sacred blog posts of “Rationalism,” their atheist faith. This provoked the most tech friendly, startup-loving optimists in the industry to root for Microsoft (!), which nobody found weird, while Microsoft publicly negotiated a pseudo acquisition of possibly the world’s most important company for approximately $0. In the end, with the help of several high-profile tech middlemen, Sam knifed the board, resumed control of OpenAI, and we all went home for Thanksgiving. But holy shit.

It has been a week of narcissism and groupthink unlike anything I’ve ever seen from tech. I’m told there are heroes in this story. Let’s see if we can find them.

Friday, dispensing with all familiar euphemisms typical of corporate assassinations (“exciting new endeavors,” “spending time with family”), OpenAI’s board updated its site with a message indicating, though not explicitly stating, they fired Sam for something grossly unethical, naming CTO Mira Murati interim CEO. In response, the industry promptly lost its shit, eliciting a kind of eulogy for Sam on Twitter glowing and heartbroken in degrees not achieved since the actual death of Steve Jobs, to whom Sam was immediately compared. That comparison only grew more pronounced over the weekend, as Sam announced he was starting a new company (?), and eventually, when it seemed he might come back to life, culminated in a comparison to Jesus Christ. The loophole that almost got him reinstated (the first time): Mira threatened to use her authority as interim CEO to rehire Sam, along with now-former board chairman Greg Brockman (who had at the height of this drama resigned, started his own company, been hired by Microsoft, and seemed to still be working at OpenAI). But Mira’s loyalty to Sam accelerated the board’s search for a successor. By Monday, she was also removed from power, and the frantic board tapped its third-choice CEO, Emmett Shear, the founder / former CEO of Twitch, and a committed, thoughtful AI doomer. With plans for Sam’s new startup scratched I guess, Satya announced both Sam and Greg had taken jobs at Microsoft. There, the team would presumably rebuild OpenAI with help from the hundreds of furious OpenAI employees still demanding Sam’s return, and by Tuesday morning Microsoft’s CTO made the strategy explicit.

I’m recalling the scene from There Will Be Blood in which a humble farmer or whatever refuses to sell his oil-rich property to beast mode Daniel Day-Lewis, who in turn buys the property adjacent to the man, and sucks up all his oil like a milkshake.

The board could never win, even if they ‘won.’ But finally, early Wednesday evening, lone, arguably sane board member Adam D’Angelo acquiesced to Sam’s return, and one of the women most responsible for this nightmare declared a job well done (she was now fired (and permanently disgraced (BYE))). An incoherent mess of a story. Childish. Embarrassing for everyone involved. I’m obsessed, of course. How did we get here?

Let’s talk about this board from atheist hell.

Initially, the hostile element of OpenAI’s board implied some nefarious behavior of Sam’s. But this increasingly seems to have either been a total invention, a gross exaggeration, or, ironically, an unfortunate miscommunication from the now famously communication-sensitive board.

“We can say definitively that the board’s decision was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices,” said OpenAI COO Brad Lightcap. “This was a breakdown in communication between Sam and the board … We still share your concerns about how the process has been handled, are working to resolve the situation, and will provide updates as we’re able.”

In other words, Sam was a bad texter so we altered the course of human history. Remember, these are the “Rationalists.”

Boards can fire executives, and it happens all the time. But even the most evil, blood-sucking board of a privately-held company is typically aligned, economically, with the company’s success. This was not, and is still not, the case at OpenAI, which was incorporated as a non-profit, before becoming kinda for-profit, and therefore enjoys an absolutely bananas corporate governance structure in which a non-profit entity controls a separate for-profit company that shares the non-profit’s name. That non-profit is controlled by a typical sort of clown-y non-profit board, no members of whom hold equity in the for-profit org, and almost all of whom, at the time of Sam’s firing, were themselves controlled, or at least heavily influenced, by a decel cult.

John Loeber did a great job with the full board history, but our story really starts earlier this year, when three of nine OpenAI board members either left citing conflicts of interest, or were removed. From here, board members could not agree on which seats to fill, which led to a bitter stalemate, and signaled the end. After Greg and Sam, the crazy malcontents remaining: Ilya Sutskever, Adam D’Angelo, Tasha McCauley, and Helen Toner. But at least three of them have conflicts of their own.

Until yesterday, the conflicts of Quora founder Adam D’Angelo attracted the most attention, given he is also the founder of an obviously competitive product, which Sam just weeks ago indicated would soon be made irrelevant by OpenAI. But Helen and Tasha are both wed to Open Philanthropy, financed by Facebook co-founder Dustin Moskovitz who, when not funding the campaigns of pro-crime district attorneys across the country, is likewise a major investor in OpenAI competitor Anthropic. This link, however tenuous it may appear, was actually a major point of contention between Sam and Helen (Confirmed Worst One ™), who, while sitting on OpenAI’s board, publicly attacked the company and championed Anthropic. Sam considered this something of an obvious problem (which, no shit), but didn’t have the power to remove the decel queen of darkness. This would prove unfortunate. According to the New York Times, after she helped coordinate Sam’s corporate assassination, she told OpenAI’s chief strategy officer the destruction of the company would fulfill her mission as a board member.

Very fucked up crazy person!

But from where does this crazy come?

Gather round, friends, it’s time to talk about Effective Altruism (just say no).

Ilya, the highly-respected mind behind much of the foundational innovation at OpenAI, has since apologized for his part in Sam’s removal. But, from reporting, he was possibly spooked over what he perceived to be the rapid, dangerous progress of OpenAI. Was Ilya taken in by the others, who perhaps argued their cases for nuking Sam in the language of Effective Altruism, while acting with either greedy or more nefarious motive? Or are all of these people actually just authentically part of a cult?

Tasha and Helen both sit on the advisory board of the British (ew) international Center for the Governance of AI (ew ew), while Tasha sits on the U.K. board (ewww) of the Effective Ventures Foundation, tying her to the Effective Altruism movement through the Centre for Effective Altruism. If we take these people at their word, they really do just think they’re saving the world.

EA is a philosophy of charity popular among self-described “Rationalists,” which purportedly employs an evidence-based approach to achieving the most good possible. Always considered slightly edgy, in that an ‘evidence-based approach to doing the most good possible’ typically did not include funding such socially popular things as welfare or very small schools in remote African villages, for example, the approach led EAs to focus on important, but wildly underfunded causes: less lucrative research in math and biology, narrow but vital forms of political engagement (Moskovitz’s pro-crime stuff, I guess), a campaign to reduce the pain experienced by shrimp. The importance of navigating existential risk, however, has always been spoken of by EAs in almost sacred-sounding language. Over the years, this branch of focus overtook the others, and among the x-risks in need of mitigation nothing ranked higher than humanity’s risk of extinction from Artificial General Intelligence. Fear of the Bad AI God became a kind of obsessive faith for atheists. The decels were born.

Critically, and separate from what they ostensibly believe, the decels are a small, insular group of people with its own culture, and groups of this kind are all beholden to the same irrational group psychology — even when they call themselves “Rationalist.” With no economic incentive in play, status dictates everything, and among decels few men have more status than the atheist Pope of Rationalism, “Bomb the Data Centers” Eliezer Yudkowsky.

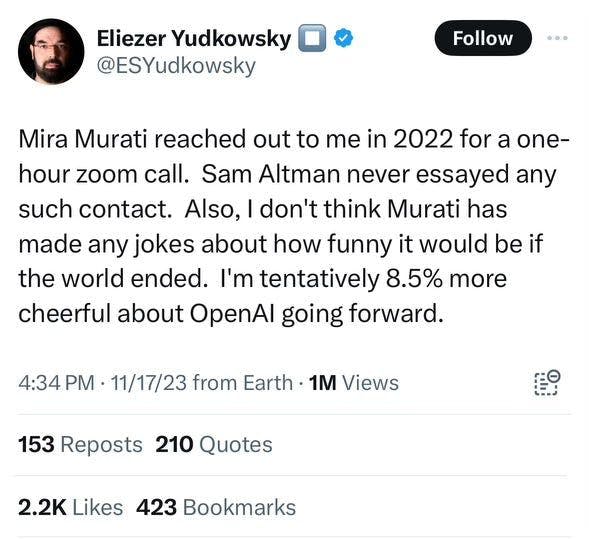

Here, with Mira momentarily appointed CEO, were Eliezer’s initial thoughts concerning Sam’s expulsion from the company he co-founded:

An unfathomable display of narcissism? Or did Eliezer believe he held influence over OpenAI’s board because he did hold influence over OpenAI’s board? Later, he even implied as much with a suspicious denial of the fact for which literally nobody asked. Given the board had no economic motive, it was only natural the members would seek status, but not from normie society. These board members only sought status from other EAs — again, a kind of doomsday cult that worships an all-powerful, evil AI god — the toxic effects of which were all compounded by this century’s endless thirst, from every human being too online, for attention.

Now what about Sam?

Well, he played this all perfectly. Sam has been building this company for a long time, but EAs have always been embedded in the AI community. From his vantage point, there was never any building OpenAI without their support. So off to church he went. At first, he balanced out the EAs’ religious fanaticism with more pragmatic board members. He aggressively assumed a pro-regulation stance in Washington to appease them. He took no equity in the company, and submitted to a non-profit governing body so nobody could call him greedy, and everyone would say that OpenAI was the purest of the AI projects — attracting all the best talent, which skewed, especially early on before the hype, wildly ideological. Sam’s only apparent flaw, which did prove nearly fatal, was losing control of the board composition. But was that ever a real risk? Sam is a successful CEO, and one of the most well-connected men in tech, now opposed by… who? A man easily framed as a jealous founder, a communist spy (who knows!), and Joseph Gordon-Levitt’s wife? The EAs were never going to win.

Now, if Sam is everything he says he is, we’ll start to see the censorship he’s presided over fade away, off will come the AI safety wheels, and Eliezer can scream at his god in the sky. Because, at this point, I doubt Sam will ever lose control again.

Which makes the groupthink that surrounded him a little bit concerning.

Following news of Sam’s ouster, the Valley immediately coalesced around one opinion, which, to a large extent, was the opinion I shared: fuck this crazy fucking board from atheist hell. But the loudness of it all left curiously little room for questions pertaining to everything from culpability (who actually architected this corporate structure?) to, among our more religiously minded anti-religious, the powerful technology that almost fell under the permanent and total control of a single corporate behemoth. What does an all-powerful, centralizing technology in the hands of Microsoft mean for censorship? What might this giant’s relationship with Washington, along with foreign regulators, mean for competition, and by extension further innovation? And why does nobody seem to care about these questions?

Our own cult, perhaps?

As the industry unraveled into chaos, I spoke with a friend working on another well-known LLM. “I guess it’s decels vs Microsoft,” he said. “Which is insane.”

A question for the shitposting ‘utopian’ tech anons who tell us they’re building a god, but a good god: would this powerful machine really have been better off under the control of a giant, soul-crushing, hopelessly centralized corporation, itself wed to the whims of our government, than it was under the control of a well-meaning “Rationalist” freak show? For what it’s worth, my sense is yeah, probably.

But why were we cheering?

-SOLANA
